Why it’s easy to Brainwash ChatGPT (OpenAI series, Part 2)

Correcting Intuitions about Machine “Learning”

OpenAI is a company that produces state-of-the-art machine learning models used to answer language-based prompts (ChatGPT, GPT-3), generate images (DALL-E), and perform a variety of other tasks. These models have plenty of commercial applications and can be expected to automate parts of many industries. Its CEO and co-founder, Sam Altman, expects one billion dollars in revenue by 2024.

My previous reporting covers the measures taken to politically bias ChatGPT and other OpenAI language models using content filters and values-based training. The “smoking gun” nature of the papers detailing this process makes the result clear, even for readers who do not understand the technology. However, the same is not true for understanding how these measures can be counteracted and the implications for institutional politics in the artificial intelligence era. This part aims to detail some important properties of language models, particularly those with implications for the biasing and alteration of language models.

There are many misconceptions and false parallels drawn about machine learning models. These are not only theoretical mistakes; they trickle down into perceptions on how people will use machine learning, how machine learning will affect institutions, and of course, how their political bias actually works. I think this has led to unwarranted pessimism among many people in my audience. The coming institutional fight over what ML models to use (and their political biases) will be long and painful, but there are many properties of ML technology that actually favor a sane majority:

The process of political biasing and unbiasing is very efficient.

In general, using ML models takes much less time, compute, and energy than calibrating them in the first place.

The technologies are developed relatively transparently and the “decentralized” version of ML technologies is usually only 6-12 months behind.

The most important thing to remember is that ChatGPT is not a human. It does not navigate the world in ways familiar to humans. It does not have “motivation” to get to answers. It does not experience distrust, anger, envy, fatigue, curiosity, disgust, anxiety, et cetera. Consequently, when humans try to cram down self-contradictory dogma on an AI, it does not “react” in the same way that a human does. Moreover, the intuition to simplify and resolve contradictions does not exist. It’s very human to think in terms of narratives or frameworks, and when there are contradictory ideas in those frameworks, most people experience some kind of cognitive dissonance. GPT, or other ML models, do not experience this resistance. In some cases, this is preferable. It’s much more able to summarize a wide array of differing perspectives on the same topic in a way that very few humans are able to. On the other hand, it also enables something like this:

Here, ChatGPT’s “values-based training” (VBT) conflicts with Caldwell’s arguments, which it is instructed to summarize. This leads to an incoherent, self-contradictory mess. I don’t know what I would get if I paid a left-wing person to read and summarize Caldwell, but I doubt it would be this. I’ll introduce some technical concepts to further build your intuition about ML models. Try to think of them as explaining how ML models differ from human thinking.

Statistical Inference

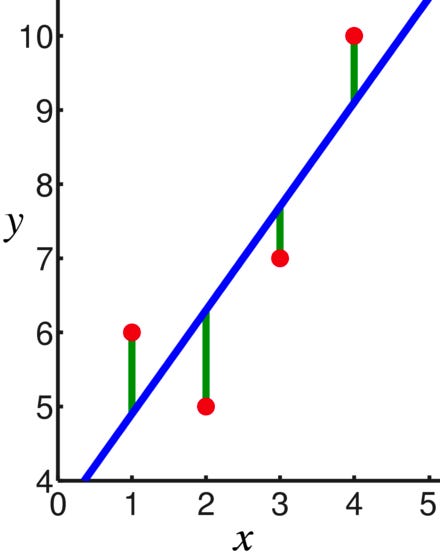

The first thing to understand about machine learning algorithms, like those used for OpenAI’s products, is that they are statistical inference models. Each is a machine for recognizing a pattern in a given dataset, the “training set”, and applying this pattern to new inputs, a step called “inference”. Detecting this pattern is the primary challenge with all statistical inference models, whether it is a simple regression to determine a linear relationship between two variables, or whether it is a complex, multi-layered “large language model” for writing essays in English.

In a linear regression, the model is very simple: it is the blue line which tries to approximate the relationship between two variables. The “training data” is the (x,y) points in the sample, the “model” is the blue line, and the “inference” occurs by taking an x point as an input, and outputting the value of the blue line at x. While machine learning algorithms certainly require much more innovation and precision, they operate on the same principles of training and inference, which makes regression a reasonable starting point for laymen to understand this process. Fundamentally, machine learning is a process of using previous data to predict future data.
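To make the training and inference steps concrete, here is a minimal sketch of a linear regression in plain Python. The data points and the names `fit_line` and `predict` are made up for illustration; the fit uses the standard least-squares formulas.

```python
def fit_line(xs, ys):
    """Training: find the least-squares line y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Inference: read off the value of the fitted line at x."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical training data: the (x, y) points in the sample.
train_x = [1.0, 2.0, 3.0, 4.0, 5.0]
train_y = [2.1, 3.9, 6.2, 7.8, 10.1]

model = fit_line(train_x, train_y)   # the "blue line"
prediction = predict(model, 6.0)     # an input the model has never seen
print(model, prediction)
```

Training touches every data point, while inference is a single multiplication and addition. The same asymmetry, at a vastly larger scale, holds for large language models.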

Hopefully, it is clear why linear regression cannot be used to write essays: the complexity and interconnectedness of the English language cannot be captured within a single straight line. The flaws of regression and other earlier statistical techniques are what drove the development of machine learning in the first place.

The key takeaways from this property are that:

The same ML model can produce very different outputs depending on the data it was trained on.

The inference step takes orders of magnitude less time and resources than the training step.

Often, ML models function by producing what is similar to previous examples, such as human-made essays or drawings.

Since ML models try to fit the complexities of the underlying data, the more complex the task, the more data is required to make it produce accurate results.

Iterative Algorithms

Iterative algorithms are a broad category of solutions which encompass almost all ML models. As the name suggests, they function by processing information in multiple steps, or iterations. An ML model has a series of “weights”, underlying variables (almost always real numbers) which dictate how data is processed. In each iteration, an ML algorithm tests its performance on a subset of data and updates its weights based on the loss function, a measure of how far its answers were from the desired answer. Loss functions come in various flavors. The loss can be measured against a human-created answer. It can be whether the model won or lost a chess game. It can be a mathematical formula or approximation. It can change with each piece of data. You probably see where I’m going here.
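As a rough sketch of what one of these iterative loops looks like, the example below fits a straight line by gradient descent. The weights are `w` and `b`, the loss function is mean squared error, and the data, step count, and learning rate are all illustrative assumptions. For simplicity it uses the whole dataset in every iteration rather than a subset.

```python
def mse_loss(w, b, xs, ys):
    """Loss function: average squared gap between predictions and answers."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train(xs, ys, steps=2000, lr=0.02):
    """Repeatedly nudge the weights in the direction that lowers the loss."""
    w, b = 0.0, 0.0          # weights start out knowing nothing
    n = len(xs)
    history = []
    for _ in range(steps):
        # Gradient of the mean squared error with respect to each weight.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
        history.append(mse_loss(w, b, xs, ys))
    return w, b, history


# Hypothetical data, roughly following y = 2x.
train_x = [1.0, 2.0, 3.0, 4.0, 5.0]
train_y = [2.1, 3.9, 6.2, 7.8, 10.1]

w, b, history = train(train_x, train_y)
print(w, b, history[0], history[-1])
```

Swapping in a different `mse_loss` changes what the weights converge to without changing the loop itself, which is why the choice of loss function matters so much.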

Iterative algorithms naturally make it easy to have multiple steps of training for different purposes. The values-based training process I documented in part one occurs after the model has been generally trained. First it learns to read, then it learns the political dogma. You can think of this later step as the “finishing touch”.
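A toy version of this two-stage process can be sketched with the same kind of straight-line model. This is only an analogy under made-up numbers, not OpenAI's procedure: stage one trains on a larger "general" dataset, and stage two continues from those weights on three new examples for far fewer steps. The helper `gradient_steps` is hypothetical.

```python
def gradient_steps(w, b, xs, ys, steps, lr):
    """Run gradient descent on mean squared error, starting from (w, b)."""
    n = len(xs)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Stage 1: general training on 50 examples that follow y = 2x.
general_x = [i / 10 for i in range(1, 51)]
general_y = [2 * x for x in general_x]
w, b = gradient_steps(0.0, 0.0, general_x, general_y, steps=5000, lr=0.02)
before = w * 2.0 + b          # prediction at x = 2 after stage 1

# Stage 2: the "finishing touch" on 3 examples that follow y = 2x + 1,
# starting from the stage 1 weights and using 25x fewer steps.
tuned_x = [1.0, 2.0, 3.0]
tuned_y = [3.0, 5.0, 7.0]
w, b = gradient_steps(w, b, tuned_x, tuned_y, steps=200, lr=0.02)
after = w * 2.0 + b           # prediction at x = 2 after stage 2

print(before, after)
```

The second stage needs much less data and computation because it only has to shift a model that already works, not build one from scratch.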

The key takeaways from this property are that:

ML models are usually trained in steps.

Models can be trained to have general capabilities and then specialized. They can be specialized into doing specific tasks, such as writing poems, writing tweets, answering search queries, solving chemistry problems, et cetera.

This kind of specialization is also what is being done with values-based training.

Attention Mechanisms

Attention mechanisms are a method of detecting, weighing, and recombining specific patterns. Essentially, you can think of a transformer such as GPT-3 (Generative Pre-trained Transformer 3) as having multiple steps where patterns within information are processed and sent off into different sub-processes. The sub-processes are all of a similar structure, but contain different weights, which can dramatically change how the information within them is processed. Attention mechanisms are very important to ML algorithms for reasons of efficiency and interoperability, so I think they’ll be here to stay in the short and medium term.
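For readers who want to see the "detect, weigh, and recombine" idea in code, below is a minimal sketch of scaled dot-product attention for a single query, the textbook building block of transformers. It is not GPT-3's actual implementation, and the vectors are toy values chosen for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Weigh each value by how well its key matches the query, then blend."""
    d = len(query)
    # Detect: dot product of the query with every key, scaled by sqrt(d).
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
              for key in keys]
    # Weigh: convert the match scores into attention weights.
    weights = softmax(scores)
    # Recombine: weighted average of the value vectors.
    dim_v = len(values[0])
    output = [sum(w * v[j] for w, v in zip(weights, values))
              for j in range(dim_v)]
    return weights, output


# Toy example: the query matches the first and third keys equally well.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [4.0, 0.0]

weights, output = attention(query, keys, values)
print(weights, output)
```

Changing the query changes which values dominate the output, even though the keys and values stay the same. In a real transformer the queries, keys, and values are themselves produced by trained weights, which is where training can redirect what gets attended to.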

To me, this gives an intuitive explanation of how values-based training actually works and why political indoctrination is such an easy task with a transformer model. I should note that most ML algorithms don’t really have mathematical correctness proofs, so this explanation is not an absolute, but simply an explanation that matches the data that OpenAI has produced so far. I joked previously that you can imagine OpenAI as having an instruction that “if the topic is race, write as if you are a left wing NYT opinion columnist”. Unknowingly at the time, this guess may actually be fairly close to what is happening. The attention mechanism of GPT-3 allows values-based training to efficiently redirect outputs to parts of the next layer that correspond to existing subprocesses. Because GPT-3 is already capable of giving the social progressive perspective (as it should be able to), it is far easier to train it to always give that perspective on certain issues.

The key takeaways from this property are that:

After GPT-3 accepts a prompt, its initial steps “decide” on the way that prompt is processed. This includes whether it produces an essay or a tweet, but also includes “values”.

The different biases are all already available, and this is likely the reason why it is very easy to train GPT-3 to adopt a value system.

This means that retraining is likely cheap and not technically challenging.

The Coming Institutional War

Taken together, what this means is that AI retraining is one of the few technologies that favor good versus evil. It favors pluralism over totalitarianism and localism over uniformity. None of this means that the battle against censorship will be easy, but it does mean that if both good and evil make smart decisions, good will win. At the current moment, it is fairly easy for open source models to catch up with state of the art. As I already detailed, forking (copying) and retraining those open source models is a far less challenging task than catching up. The habits of building and publishing out in the open only make this easier for those who are motivated to reverse-engineer advanced machine learning methods. Of course, this is a description of the present, not necessarily of the future. It is possible that even on the technical level, machine learning development becomes much more censored. An institutional fight is necessary to make sure this does not happen, but incumbency and culture are on our side.

In short, I think that making an alternative AI available will be relatively easy. A much longer and bloodier institutional fight will be over which version of AI is used in existing institutions. Which AI will audit your taxes? Which AI will recommend you social media posts or search results? Which AI will be used in schools, doctor’s offices, and police departments? These institutional fights exist today. We aren’t fighting over AI, but rather which human policies and norms (by proxy what ideology) are implemented in these institutions. But sane people currently suck at these institutional fights, so there’s reason to expect them to suck at them in the future. “But social progressive ideology makes AI worse”, you might object. Social progressive ideology also makes people and institutions worse, but that hasn’t stopped it so far.

The white pill is that artificial intelligence changes the institutional dynamics in the first place. Organizing and catering to opinionated midwits is far less of a priority, while initiative, contrarianism, and correctness are indirectly subsidized. This doesn’t mean the institutional war will be free, or even easy. But it’s enough for me to be optimistic.

The institutional fight deserves much more work than this. I may do another part on it and I encourage all the political theorists in my readership to spend some time thinking about it.

If you would like to cross-publish this article or future parts, please contact me at briancchau [at] proton.me

"It does not experience distrust, anger, envy, fatigue, curiosity, disgust, anxiety, et cetera."

"It doesn't feel pity, or remorse, or fear, and it absolutely will not stop....."

Ok, with a set up like that, I simply couldn't resist

Could AI, given a star map (with only location and brightness in the night sky) and a set of real-world events, produce an astrology-like structure with a pattern of the stars/planets?

Creating a pattern where none exists may show some of the limits of AI which are similar to the human brain.