Why You’re Wrong About AI Data

Most people believe data legibility is a moat; in reality it guarantees no moat.

Today, hundreds if not thousands of venture backed startups go to their investors and tell them that data is their moat. As the story goes, “I’ll be the first to collect data on a shadowy crevice of business plumbing and because of that, I’ll have a permanent advantage over any competitors.”

Legibility is the foundation of their companies. “Because LLMs will drastically lower the cost of information, reading that information to begin with provides a huge advantage,” the stories continue. That’s all great in theory. Their proponents even have a few recent examples to justify their argument. Take human feedback data for ChatGPT or indexed search data for perplexity. Specialized data does enable real product breakthroughs — that much is correct. In 2022 or 2023, data moats appeared strong. Think about what that specialized data accomplished. In the case of ChatGPT, it created a “friendly assistant” persona that helps users interact with LLMs in a more intuitive way. In the case of Perplexity, it enabled LLMs to browse the internet and other large datasets.

Both of those are important breakthroughs, but if you’ve tried a selection of AI tools recently, you’ll notice that they are the opposite of a monopoly. Every major AI lab has successfully copied the “friendly assistant” persona. Almost all major AI labs now have a search integration. Even in the cases where specialized data created the most value, the features created by that specialized data spread far and wide — they became a de-facto industry standard.

To understand why people expected specialized data to be a moat, rather than a commodity, we have to return to the original data moat thesis. Originally, raw quantity of data was one piece of assembling a runaway “god model”, which was believed to become a natural monopoly for whoever created it first. This thesis collapsed as all AI companies suffered diminishing returns from scaling.

Afterwards, the data narrative flipped from quantity to quality. Specialized data became more valuable and a new data narrative emerged — that AI models would commoditize all repetitive work and the new data moat was turning unreadable, “soft” workflows into readable, automatable workflows. This data thesis had a more realistic expectation of AI model abilities, but blindly copied assumptions about data from the god-model system. In the god-model system, the role of data was to bootstrap a positive feedback loop that would develop into a runaway AI — a natural monopoly. In the modern system, the role of data was to enable moderately-intelligent AI models to do repetitive work on previously inaccessible systems. In the god-model system, data created a moat because of a short-term singularity, not something intrinsic to data itself. In the modern system, data is part of a long-term business equilibrium, which creates a moat because … ?

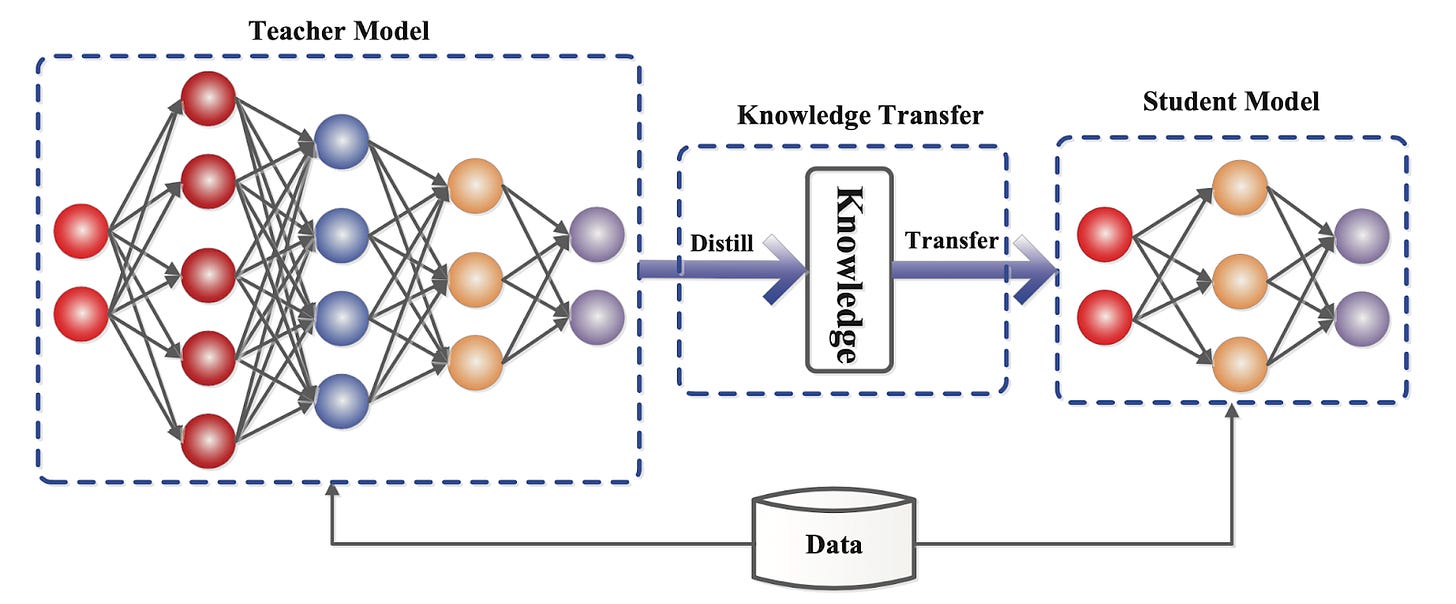

One early way that the data thesis failed was model distillation. Model distillation is a fancy term for AI models being trained on other models' outputs. This might happen intentionally as a strategy for companies to catch up, as OpenAI accused DeepSeek of doing to them in a congressional report. It can also happen accidentally, as more people post OpenAI model outputs to the internet, its style becomes part of the public set of training data. So the gains from specialized data in a model like the first version of ChatGPT became commonplace overnight.

Work through why these examples failed to become monopolies. Reinforcement learning from human feedback, the technique used to create the initial version of ChatGPT, was a genuine breakthrough in customization and UI. But why did that fail to create a moat? The answer is because once that style was crystallized, both in theory and in practice, it became easier to copy, not harder. For a natural monopoly like Facebook, the fact that Facebook already exists makes it harder, not easier, to build a copy of Facebook. Model data is the opposite of a natural monopoly.

As Martin Casado told me in a podcast last summer, “The marginal value of the next bit of data for the leader is actually lower than that for a challenger. So even without the distillation, historically in AI, we've seen this perverse economy of scale where the leaders take a lot more investment to make reasonable gains relative to challengers.”

Now, startups and legacy companies alike are repeating the same mistake as the leading labs. And they are trying to build their moat on a foundation even less stable than ChatGPT’s assistant persona — business processes. There’s a core truth to what they’re doing: it’s very valuable to make the hidden parts of business processes visible to AI models, especially as they become the limiting factor for AI automation.

But start thinking about model distillation and ask yourself for any given process, whether the second company that automates that process has it harder, the same, or easier than the first. The elephant in the room is classic model distillation — if a competitor gets its hands on your model output, your data moat is over. But even without model distillation, there’s only one reason why illegible process data is more difficult than collecting legible data, like we do from the internet or internally in modern companies — we haven’t figured out the system to collect or quantify it. The hard work of a data collection pipeline is in its design, engineering, and debugging. Once those tasks are done and the first version of a software product exists, it becomes easier to reverse-engineer, follow, and improve upon. Process illegibility is not a moat; it is a textbook example of an anti-moat.

To be fair, making this data legible creates a massive amount of value. The incentives for surfacing, collecting, and integrating data can be improved by creating strict legal contracts with large firms or tight patent laws around AI systems, though neither of these solutions are without drawbacks. However, once you’re talking about creating novel legal solutions to properly capture value, you’re already incredibly far from the natural monopoly paradigm.

Data is less moat, more bailey.

Great read. Zooming out, I see a broader pattern where legibility creates coordination, not defensibility. Making a process readable generates value, but it also makes diffusion cheaper for everyone else. The advantage shifts from owning data to controlling how coordination happens around it.

You want an imagining of what the various LLMs are likely to do over the coming decades........https://www.youtube.com/watch?v=quUnYyJg5N0