Doomsday Isn’t Coming, But Politicization Might Be

A New Report From the National Telecommunications and Information Administration

Biden’s NTIA is a bellwether for what a Biden or Harris administration will do on AI. Today, the NTIA released a report outlining a return to normalcy: great news if you were worried about a doomsday cult taking over the US government, but bad news if you’re worried about the politicization of AI.

0. Major Risks: A Taste of Sanity

1. Beware Politicization and Racialization

2. A Reasonable Approach to CBRN

3. Shut Up About Dual Use

0. Major Risks: A Taste of Sanity

We are so back.

Many techno-optimist commentators [1, 2, 3, 4] are excited about this report. They have good reason to be. The report weighs “marginal risks and benefits” of open-weight models — in other words, a realistic assessment of the ways open-weight models will make the world better or worse.

If you work on AI and see the benefits it is providing to people in real time, this might still sound like both-sidesism. AI doesn’t merely scrape a passing grade on cost-benefit; it passes with flying colors! But remember that the NTIA is a regulatory agency, and regulators often see their role as focusing on the negative. From a regulator, both-sidesism is exceptional, especially when the report lists concrete benefits alongside purely hypothetical risks for everyone to see.

What I find most encouraging is the increasing understanding of the “geopolitical benefits” of open-weight models. I believe that distributing AI is the key geopolitical tool in the tradition of American soft-power dominance, a point that other agency reports have largely ignored.

China is not Iraq. Thinking that we will have a large, permanent research lead over China is like thinking that sanctions could stop the Soviet Union from making it to space. Instead, we build dominance in AI by making sure the best options on the global AI market are made in America. Open-weight models are critical in building that soft power.

In classic agency-report style, the NTIA lists three approaches it considers and goes with the middle one. In doing so, it rejects both near-term action against open-weight models and full-throated advocacy on behalf of open source.

1. Beware Politicization and Racialization

Many people’s first AI policy memory was when Google released Gemini, a state-of-the-art language model that infamously refused to depict white people.

In original reporting (I was the first to quote from the policy section of the Google Gemini paper), I documented the precise interventions Google used to create an extremely politically biased language model. The team tasked with Gemini’s indoctrination was “selected based on their expertise across a range of domain areas, including those outlined within the White House Commitments, the U.S. Executive Order on Safe, Secure, and Trustworthy Artificial Intelligence, and the Bletchley Declaration.” In other words, there is a direct link between the political preferences expressed in the Biden executive order and the political bias of Google Gemini.

AI can be seen as a system that literally illustrates the logical extreme of strict political preferences. I suspect that if a Republican administration mandated that AI reflect a nationalist worldview, similar extremes would show up in an ideologically reversed Gemini. An environment free of political or racial pressure is essential to generating positive-sum benefits, scientifically, economically, and socially, for people of any race, gender, or political affiliation.

On this issue, the NTIA report foreshadows a bitter struggle:

“The breadth of the potential impact and the current lack of clear determination regarding how to eliminate bias from all forms of AI models indicates that more research is needed to determine whether open foundation models substantially change this risk.”

And:

“The ability to test for bias and algorithmic discrimination is significantly enhanced by widely available model weights. A wider community of researchers can work to identify biases in models and address these issues to create fairer AI systems. Including diverse communities and participants in this collaborative effort towards de-biasing AI and improving representation in generative AI is essential for promoting fairness and equity. mitigating bias in AI.”

The report doesn’t actually cite anything supporting the claim that “The ability to test for bias and algorithmic discrimination is significantly enhanced by widely available model weights.” In fact, I don’t think this is true. Why? Because past administrations, including the current one, treat disparate impact as proof of discrimination. In other words, Google Gemini’s need to depict an excess of minorities in all images, including images of historically white groups or areas, is a natural extension of the racial views of the Biden Executive Order.

The disparate impact standard doesn’t actually require model weights to assess, because it is measured on a model’s outputs. The AI “discrimination” lawsuit cited in the report was filed against a closed-source resume-screening tool.
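To make the point concrete, a disparate impact check only needs a model’s decisions, never its weights. Below is a minimal sketch, assuming a hypothetical black-box resume screener whose accept/reject decisions we can observe by group. The group labels and counts are made up, and the 0.8 cutoff is the “four-fifths” rule of thumb from US employment-selection guidelines, not a threshold the NTIA report specifies.

```python
from collections import defaultdict

# Assumption: "four-fifths" rule of thumb from US employment-selection guidelines.
FOUR_FIFTHS = 0.8


def selection_rates(decisions):
    """Share of positive decisions per group, from (group, selected) pairs."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {g: selected[g] / totals[g] for g in totals}


def adverse_impact_ratios(decisions):
    """Each group's selection rate divided by the highest group's rate."""
    rates = selection_rates(decisions)
    best = max(rates.values())
    if best == 0:
        # Nobody was selected at all, so there is no disparity to measure.
        return {g: 1.0 for g in rates}
    return {g: r / best for g, r in rates.items()}


def flagged_groups(decisions, threshold=FOUR_FIFTHS):
    """Groups whose impact ratio falls below the threshold."""
    return [g for g, ratio in adverse_impact_ratios(decisions).items() if ratio < threshold]


if __name__ == "__main__":
    # Hypothetical screener outputs: only decisions are observed, never weights.
    outcomes = (
        [("group_a", True)] * 50 + [("group_a", False)] * 50
        + [("group_b", True)] * 30 + [("group_b", False)] * 70
    )
    print(adverse_impact_ratios(outcomes))
    print(flagged_groups(outcomes))  # ['group_b']
```

Nothing in the calculation touches the model’s internals, which is why the check works just as well on a closed-source tool as on an open-weight one.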

One reason open source matters is that it allows a pluralistic system of free speech and free communication, in which users can modify their models to counteract the ideological biases of their creators. How would someone seeking hegemonic conformism portray this?

“There has been substantial documentation of AI models, including open foundation models, generating biased or discriminatory outputs, despite developers’ efforts to prevent them from doing so.

“Open foundation models may exacerbate this risk because, even if the original model has guardrails in place to help alleviate biased outcomes, downstream actors can fine-tune away these safeguards.”

I am begging the NTIA not to make Google Gemini’s insane racial preferences mandatory. While conservatives may be disproportionately opposed to this insanity, in the long term it doesn’t benefit anyone, of any race or political view.

2. A Reasonable Approach to CBRN

Okay … we’re back to praising the NTIA. National security discussions of AI are often dominated by CBRN (Chemical, Biological, Radiological, and Nuclear) risks, most notably biological risks. Besides highlighting benefits to American competitiveness against China, the NTIA report takes a more reasonable approach to assessing CBRN risks.

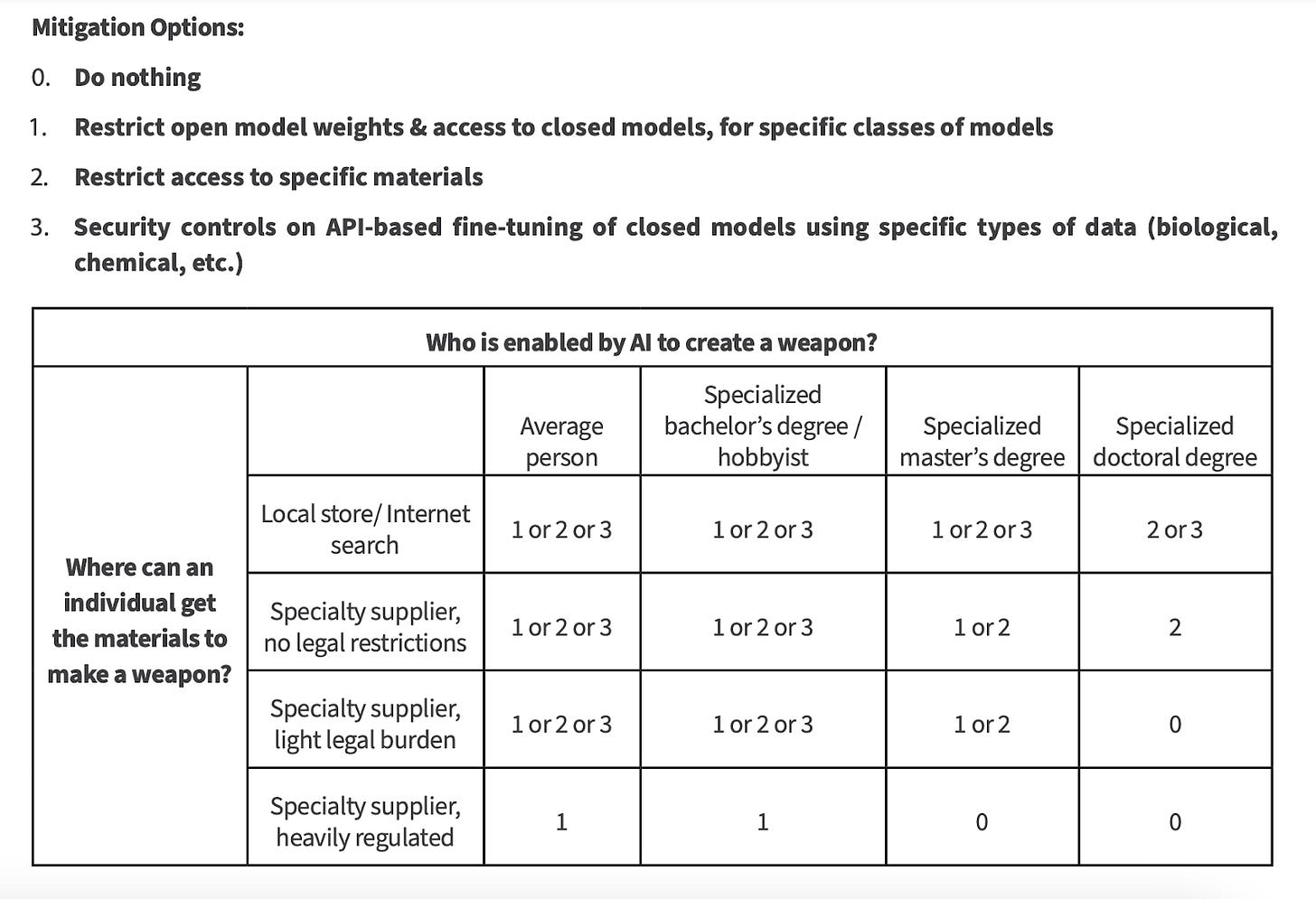

It understands that often, the hard part of making a weapon is not knowing how to make the weapon, but actually making that weapon in the real world. This may seem obvious, but it pours cold water on some of the irrational panic around AI and CBRN thus far. Moreover, they have a nice alignment chart to guide their actions on CBRN:

I have qualms with the exact categorization (for instance, is heavily restricting information really a better solution than changing the supply chain for row three?), but overall it is a more systematic and well-defined approach to CBRN, one that accounts for the fact that the most significant CBRN dangers come from weapons that are difficult to construct even with cutting-edge scientific knowledge.

This also implicitly offers a graded view of AI jailbreaks: while it may be impossible to build safeguards that experts cannot circumvent, current practice already makes CBRN and other controversial information inaccessible to a lay user. Just as you might need a PhD in biology to create a biological weapon, you might eventually need the equivalent of a PhD in prompt engineering to circumvent layers of jailbreak defenses. A graded assessment of robustness to jailbreaks would be an important step in realistically assessing CBRN risks.

3. Shut Up About Dual Use

Definitions given in the report are unclear and often simply incorrect.

Take the report’s definition of a dual-use foundation model, which requires “at least tens of billions of parameters.” This parameter threshold, which also appears in the Biden Executive Order, is a different (and, in almost all cases, lower) bar than the Executive Order’s 10^26 FLOPs (floating-point operations) threshold. It is unclear what scientific significance this parameter threshold has. Moreover, the report confusingly conflates this definition with foundation models in general. It even admits that this conflation is incorrect!
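A rough calculation shows why the parameter bar is so much easier to clear than the compute bar. The sketch below assumes the widely used rule of thumb that training compute for a dense transformer is about 6 × parameters × training tokens; it takes 10 billion parameters as the floor of “tens of billions” and a hypothetical 15-trillion-token training run. These numbers are illustrative assumptions, not figures from the report.

```python
# Illustrative comparison of the two thresholds, assuming the common
# rule of thumb that dense transformer training compute is C ≈ 6 * N * D
# (N = parameters, D = training tokens).

FLOP_THRESHOLD = 1e26   # Executive Order compute threshold (operations)
PARAM_THRESHOLD = 10e9  # "tens of billions of parameters", taking 10B as the floor (assumption)


def training_flops(params, tokens):
    """Approximate training compute: about 6 FLOPs per parameter per token."""
    return 6.0 * params * tokens


def tokens_to_reach_flop_threshold(params):
    """Training tokens a model of this size would need to hit 1e26 FLOPs."""
    return FLOP_THRESHOLD / (6.0 * params)


if __name__ == "__main__":
    # Hypothetical model sitting exactly at the parameter floor,
    # trained on an assumed 15 trillion tokens.
    params, tokens = PARAM_THRESHOLD, 15e12
    print(f"training compute: {training_flops(params, tokens):.1e} FLOPs")  # 9.0e+23
    print(f"tokens needed for 1e26: {tokens_to_reach_flop_threshold(params):.2e}")  # 1.67e+15
```

Under these assumptions, the model meets the parameter bar while using less than 1% of the compute threshold; it would need roughly a hundred times more training data to cross 10^26 FLOPs.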

The report’s citation does not support this conflation either, though it does show that the term “foundation model” is used more broadly:

“A foundation model is any model that is trained on broad data (generally using self-supervision at scale) that can be adapted (e.g., fine-tuned) to a wide range of downstream tasks; current examples include BERT [Devlin et al. 2019], GPT-3 [Brown et al. 2020], and CLIP [Radford et al. 2021].”

More broadly, “dual-use” is a category that obscures more than it defines. As I’ve written:

Throughout history, strategic technologies have often had both military and civilian uses. We won the Second World War, the Cold War, and countless other conflicts by accelerating these technologies, not restricting them.

These ‘dual-use’ technologies are ones we are already comfortable using in everyday life. A transmitter on a phone or laptop is little different from one on a remote-detonated bomb. The wires, microchips, and batteries are only slight variations of each other. Cars, planes, and ships all have vast uses in military conflict, but are all crucial to the way of life of every American.

Machine learning is the latest in a long line of technologies that drastically improve civilian and military effectiveness. This is why policies restricting “dual-use” machine learning models will cost not just dollars, but American lives.

Conclusion

I think other commentators are right to celebrate the NTIA report. It moves many issues in both a more competent and a more optimistic direction. I am slightly more concerned that it is a “return to political normalcy”. In areas such as CBRN, this means a more realistic and non-apocalyptic approach. But in highly politicized areas such as ‘discrimination’, there is a history of political pressure leading to disastrous results.

It is also unclear that aggressively pursuing disparate impact claims against AI developers is electorally beneficial to Democrats. In my view, the news stories caused by Gemini’s left-wing and anti-white biases are more favorable to Republicans. I hope that future administrations can create a bipartisan consensus not to replicate the ideological failures of Google Gemini.

Open-weight models are a pathway to this open, innovative, and pluralist future.

Update: This article has been updated to show that the 10 billion parameter standard also appears in the Biden Executive Order, but does not have a clear scientific origin.